From MNIST to the real world: why doesn’t the trained CNN model work?

The idea of deep neural networks has become more and more popular in recent years. Nowadays, many people train DNN models at home, in the office, at school or in the lab, even on the go. Disappointingly, not every model works well in the real world, even when it has shown good performance on both the validation and test sets.

In this story we will walk through a common example that many learners of machine learning and deep learning know all too well: the MNIST dataset, almost the “hello world” of data analysis and the first magic word of the AI era.

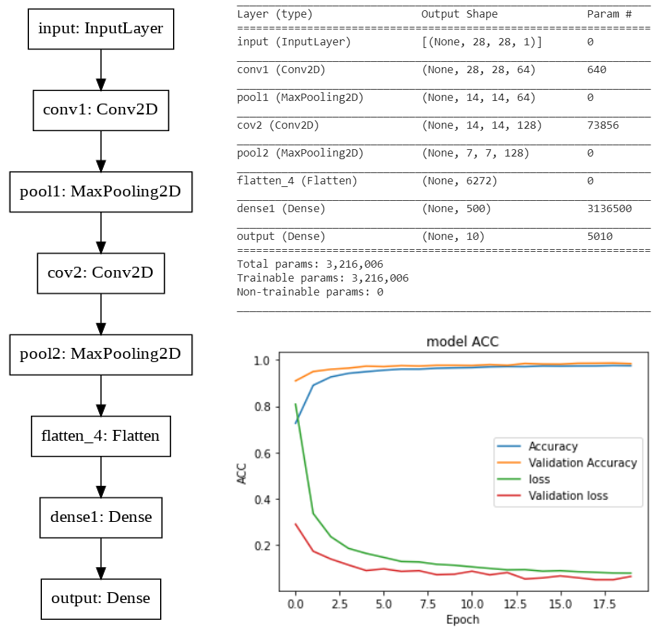

I trained a CNN model with TensorFlow/Keras. The code is relatively simple, so we focus on how well the model performs. Just take a look at the training history plot.

Okay, the model may not be the best on Kaggle (I don’t deserve that 🙃), but it should definitely work. Our train set has shape (30000, 28, 28, 1), the validation set (4000, 28, 28, 1) and the test set (5795, 28, 28, 1). Since the model works well on the validation set, let us see how it does on the test set.
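The post does not show the data-loading code. As a minimal sketch, assuming the digits arrive as flat rows of 784 pixel values (as in Kaggle’s CSV version of MNIST) and that the three sets were cut from one shuffled pool, tensors with the shapes above could be built like this. The helper names `to_image_tensor` and `split_three_ways` are hypothetical.

```python
import numpy as np


def to_image_tensor(flat_pixels: np.ndarray) -> np.ndarray:
    """Reshape rows of 784 pixel values into (N, 28, 28, 1) and scale to [0, 1]."""
    images = flat_pixels.reshape(-1, 28, 28, 1).astype("float32")
    return images / 255.0


def split_three_ways(x: np.ndarray, y: np.ndarray, n_train: int, n_val: int, seed: int = 0):
    """Shuffle once, then cut into train / validation / test parts.

    The first n_train samples go to training, the next n_val to validation,
    and everything that is left becomes the test set.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(x))
    x, y = x[order], y[order]
    end_val = n_train + n_val
    return (
        (x[:n_train], y[:n_train]),
        (x[n_train:end_val], y[n_train:end_val]),
        (x[end_val:], y[end_val:]),
    )
```

With a pool of 39,795 images (the sum of the three set sizes), `split_three_ways(x, y, 30000, 4000)` would leave 5,795 images for the test set.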

The accuracy is 0.9848 on the test set, 0.9746 on the train set and 0.9835 on the validation set. So far we can’t complain about anything.
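Accuracy here is simply the fraction of images whose predicted class matches the label. A minimal NumPy sketch, assuming the model outputs one row of class probabilities per image (as a softmax output layer would); in Keras, `model.evaluate` should report the same kind of number when the model is compiled with `metrics=["accuracy"]`.

```python
import numpy as np


def accuracy(probabilities: np.ndarray, labels: np.ndarray) -> float:
    """Share of samples whose most probable class equals the true label.

    probabilities: (N, num_classes) model outputs, one row per image.
    labels: (N,) integer class labels.
    """
    predicted = np.argmax(probabilities, axis=1)
    return float(np.mean(predicted == labels))


# Three toy predictions over 2 classes: the first two are right, the last is wrong.
probs = np.array([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]])
print(accuracy(probs, np.array([1, 0, 0])))  # → 0.6666666666666666
```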

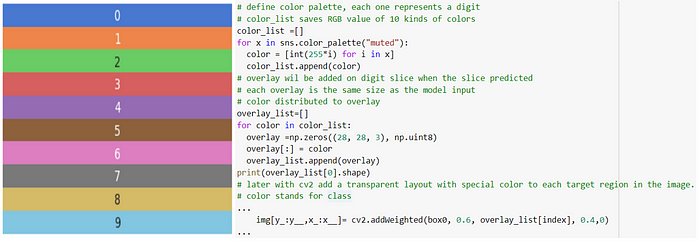

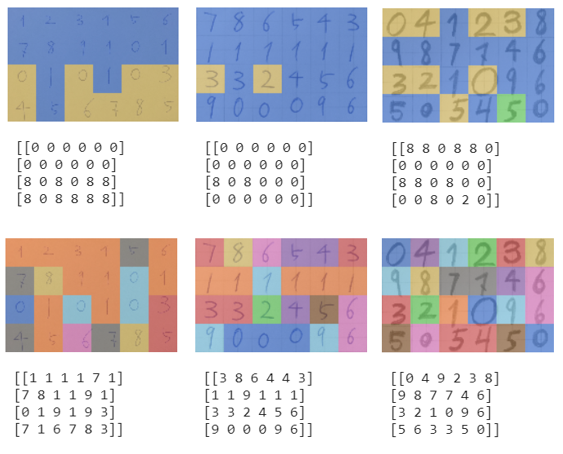

But when we apply the “perfect” model to real data, it doesn’t work anymore. Here we combine a sliding window with our trained classification model to classify handwritten digits row by row and column by column. The window scans an image from top to bottom and right to left, each time extracting a 28x28 slice that is fed into the model as test data. The model then predicts which digit each slice contains. To make the experiment intuitive and visible, I add a colored overlay on each predicted slice, where the color depends on the predicted label. The next plot shows the color assigned to each class 0–9, along with the related Python code.
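The author’s sliding-window and overlay code is not shown, so the following is only a minimal NumPy sketch of the idea under stated assumptions: `predict_fn` stands in for the trained model’s `predict` method, the input is a 2-D grayscale array already scaled to [0, 1] like the training data, and the 10-color `palette` is a hypothetical choice. All function names are illustrative. This sketch visits windows row by row from left to right; the visiting order does not change the predictions.

```python
import numpy as np

WINDOW = 28  # MNIST image size; each slice fed to the model is 28x28


def classify_windows(gray, predict_fn, stride=WINDOW):
    """Slide a 28x28 window over a grayscale image and classify every slice.

    gray: (H, W) float array, preprocessed the same way as the training data.
    predict_fn: hypothetical callable, e.g. a trained Keras model's predict,
        mapping a batch (N, 28, 28, 1) to class probabilities (N, 10).
    Returns the top-left corner of each window and a (rows, cols) label grid.
    """
    h, w = gray.shape
    row_starts = range(0, h - WINDOW + 1, stride)
    col_starts = range(0, w - WINDOW + 1, stride)
    positions = [(r, c) for r in row_starts for c in col_starts]
    if not positions:
        raise ValueError("image is smaller than one 28x28 window")
    # Stack all slices into one batch so the model is called only once.
    batch = np.stack([gray[r:r + WINDOW, c:c + WINDOW] for r, c in positions])
    probs = predict_fn(batch[..., np.newaxis])
    labels = np.argmax(probs, axis=1)
    return positions, labels.reshape(len(row_starts), len(col_starts))


def overlay(gray, positions, label_grid, palette, alpha=0.4):
    """Blend each window with the color of its predicted class.

    palette: (10, 3) RGB values in [0, 1], one row per digit (colors are hypothetical).
    """
    rgb = np.repeat(gray[..., np.newaxis], 3, axis=2).astype("float32")
    for (r, c), label in zip(positions, label_grid.ravel()):
        patch = rgb[r:r + WINDOW, c:c + WINDOW]
        rgb[r:r + WINDOW, c:c + WINDOW] = (1 - alpha) * patch + alpha * palette[label]
    return rgb
```

With a trained Keras model, a call might look like `positions, grid = classify_windows(gray, model.predict)`. A `stride` below 28 gives overlapping windows; later windows are then blended on top of earlier ones.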

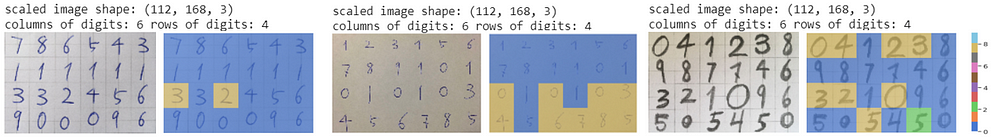

Now let us see how well our model does in the real world.

The result is very frustrating 😧😢😭. Although the model gives precise predictions in the validation and test phases and shows no signs of overfitting or underfitting, it doesn’t work on real data. In the third plot, I tried to imitate the handwriting style of the MNIST dataset, hoping to make the task a little easier and help our model from another angle.

Okay, let’s figure out why it is so.

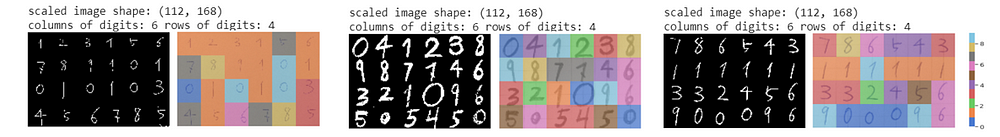

When we loaded the real-world images, we applied the same steps as in the training data’s preprocessing: resizing/reshaping, converting RGB to grayscale, normalization, etc. But we forgot something essential: the data distribution. Even after preprocessing, the real-world data still has a different distribution from the original dataset. In our case, my handwritten digits look very different from MNIST. Here we plot the grayscale images of my handwriting and of some MNIST digits:
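A minimal sketch of two of the preprocessing steps plus a quick distribution check, assuming NumPy arrays: grayscale conversion with the ITU-R BT.601 luma weights that OpenCV documents for `cv2.COLOR_RGB2GRAY`, scaling to [0, 1], and a summary of pixel intensities. Resizing is left out (it would typically use `cv2.resize`). The helper names and the synthetic images are hypothetical stand-ins, not the author’s data.

```python
import numpy as np


def rgb_to_gray(rgb: np.ndarray) -> np.ndarray:
    """Weighted sum of R, G, B using the ITU-R BT.601 luma weights,
    the same weights OpenCV documents for cv2.COLOR_RGB2GRAY."""
    return rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114


def normalize(gray_uint8: np.ndarray) -> np.ndarray:
    """Scale 8-bit intensities (0..255) to floats in [0, 1]."""
    return gray_uint8.astype("float32") / 255.0


def intensity_summary(gray01: np.ndarray) -> dict:
    """Mean intensity and share of bright pixels for an image scaled to [0, 1]."""
    return {
        "mean": float(gray01.mean()),
        "bright_fraction": float((gray01 > 0.5).mean()),
    }


# Synthetic stand-ins: an MNIST-style digit (white stroke on black)
# and the same stroke written in dark ink on white paper.
mnist_like = np.zeros((28, 28), dtype="float32")
mnist_like[4:24, 13:15] = 1.0
paper_like = 1.0 - mnist_like
print(intensity_summary(mnist_like))
print(intensity_summary(paper_like))
```

Both numbers are low for white-on-black digits and high for dark ink on white paper, even though the two images contain the same stroke.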

In layman’s terms, everyday handwriting is black on a white background, while MNIST digits are white on a black background. In grayscale, black is close to intensity 0 and white is close to 255, so the two distributions are worlds apart: the background, which covers most of each image, is bright in one and dark in the other.

Now that we have found the reason, we do not have to train a new model on data distributed like our handwriting. Instead, we can remove the difference between our handwriting and MNIST with another image preprocessing method: image thresholding. If a pixel value is greater than a threshold, it is assigned one value (e.g. white); otherwise it is assigned another value (e.g. black). We use cv2.THRESH_BINARY_INV, which turns pixels brighter than the threshold (the paper) black and darker pixels (the ink) white, so the digits in our test images become white on a black background, just like MNIST.
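The rule behind `cv2.THRESH_BINARY_INV` is: a pixel becomes 0 if it is brighter than the threshold and `maxval` otherwise. The NumPy function below reproduces that rule so it can run without OpenCV. The threshold of 127 in the comment is an assumed value, since the post does not state which one was used.

```python
import numpy as np


def threshold_binary_inv(gray: np.ndarray, thresh: float, maxval: int = 255) -> np.ndarray:
    """Mimic OpenCV's THRESH_BINARY_INV: dst = 0 if src > thresh else maxval.

    With OpenCV installed, the equivalent call would be
        _, inverted = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY_INV)
    where 127 is an assumed threshold, not a value from the post.
    """
    # Bright paper (above the threshold) turns black; dark ink turns white.
    return np.where(gray > thresh, 0, maxval).astype(gray.dtype)


# A tiny "scanned page": light paper (~250) with a dark pen stroke (~30).
page = np.full((5, 5), 250, dtype=np.uint8)
page[1:4, 2] = 30
print(threshold_binary_inv(page, 127))
```

OpenCV also accepts `cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU`, which picks the threshold automatically from the image histogram; that may help when lighting varies between photos.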

In the next plot we compare the model’s performance before (top) and after (bottom) the new preprocessing. Clearly, the model works much better after thresholding. Nevertheless, it hardly ever reaches an accuracy as good as the 0.9848 on the test set.

In summary, we used MNIST as a concrete example to show that a DNN model (and not only DNN models, but any machine learning model) can work well during training and testing yet still fail on a real problem. It is important to adjust real data so that it is close to the training data or, conversely, to train a model on data very similar to the real data.